Hello

Tutorial: setting up Apache2 with public_html for local sites on Ubuntu 13.04. This tutorial was done using a fresh install of Ubuntu 13.04.

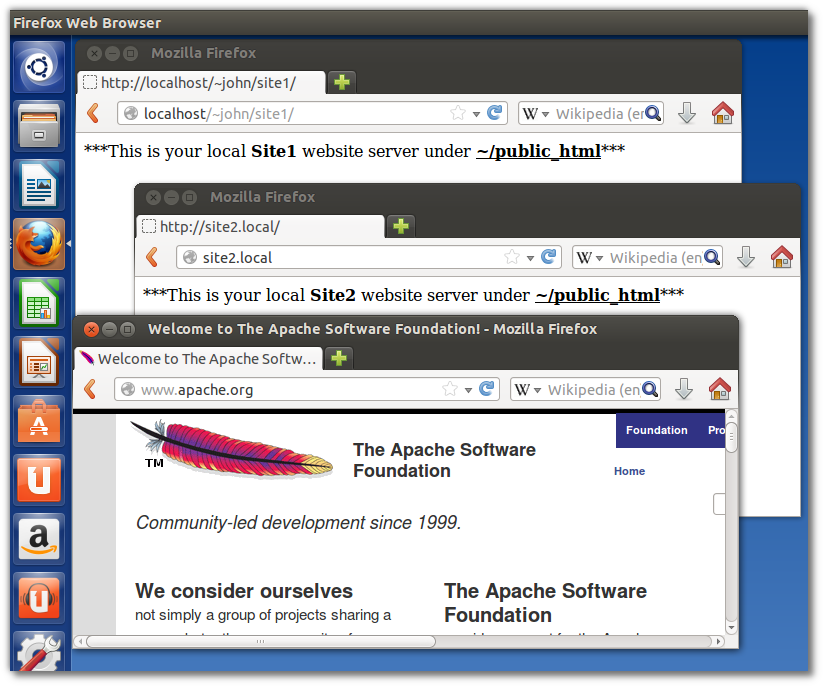

So what is this good for? My use case is that I have been doing more and more web development with Drupal and wanted to be able to create multiple development sites on my laptop. For instance, let's say you have a couple of websites, site1.com and site2.net, and you want to create a local development environment for them on your laptop, under your own user account. If your user name is john, you want to be able to put your sites inside your home folder, like ~/public_html/site1 (that is, /home/john/public_html/site1), and then just type the address “site1.local” in your browser to access the site. This makes developing with Drupal and Drush much easier than working in the /var/www directory, which a standard user normally cannot write to.