In this video, Bill Gates, on reducing population by utilizing global warming/climate change as the reason. Reducing population is the main goal of the “global warming/climate change” initiative, to reduce the population of the world.

An overview of, and meditation on, the Jewish Sabbath and how it relates to the Christian life, especially for the Catholic Christian: what the Sabbath is, why we need to know about it, and why we as Christians are no longer bound by it. It is still valid for some Jews, but not for Christians. Very Scripturally based, and includes a copy of John Paul II's Apostolic Letter Dies Domini (The Lord's Day).

There has always been something about the ever-so-popular “peace sign”, which looks somewhat like an upside-down cross, that rubbed me the wrong way. I think the reason I always found it deficient is that most people who use it espouse a “peace” that is anything but! After all, “you shall know them by their fruit”!

It was only while looking through an old pocket-size Missal from 1942 that it hit me, like in the V8 vegetable commercial: it is an Anti-Catholic symbol!

Hello

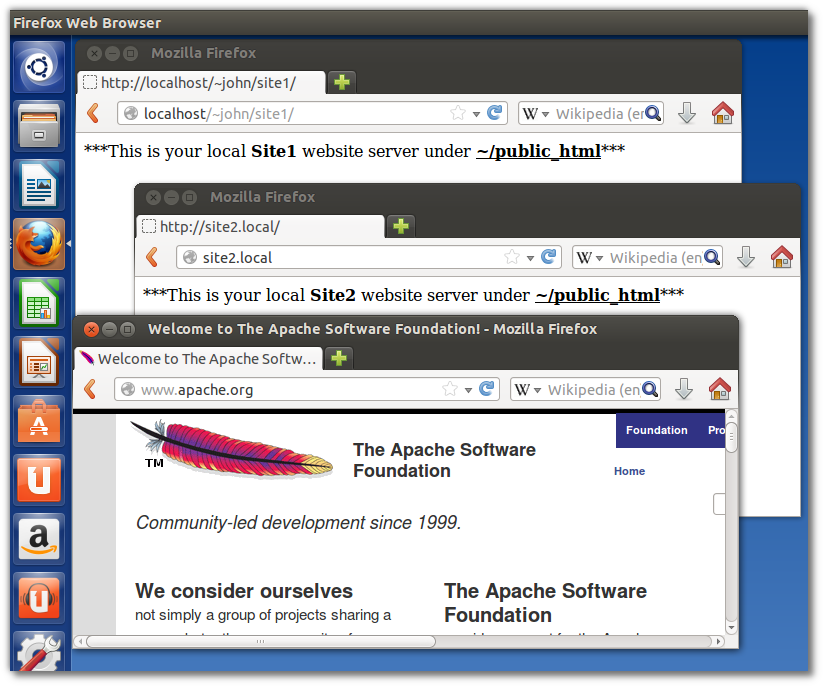

Tutorial: Ubuntu 13.04 Apache2 setup with public_html (local sites). This tutorial was done using a fresh install of Ubuntu 13.04.

So what is this good for? Well, my use case is that I have been doing more and more web development using Drupal and wanted to be able to create multiple development sites on my laptop. For instance, let's say you have a couple of websites, site1.com and site2.net, and you want to create a development environment locally on your laptop, but under your user account. If your user name is john, you want to be able to put your sites inside your home folder, like /home/john/public_html/site1 (that is, ~/public_html/site1), and then just type the address “site1.local” in your browser to access the site. This makes developing with Drupal and Drush much easier than trying to access the /var/www directory as a standard user.
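To make the idea concrete, here is a minimal sketch in Python that prints the kind of Apache virtual host block and hosts-file line such a setup would need. The helper names `vhost_config` and `hosts_entry` are made up for illustration, the `/home/<user>/public_html/<site>` layout is taken from the example above, and access-control directives are left out on purpose because their syntax differs between Apache 2.2 and 2.4. Treat it as a starting point, not as the exact configuration from the tutorial.

```python
from pathlib import PurePosixPath


def vhost_config(user: str, site: str) -> str:
    """Return a bare-bones Apache virtual host block for <site>.local.

    Assumption: sites live under /home/<user>/public_html/<site>.
    """
    docroot = PurePosixPath("/home") / user / "public_html" / site
    return (
        "<VirtualHost *:80>\n"
        f"    ServerName {site}.local\n"
        f"    DocumentRoot {docroot}\n"
        f"    <Directory {docroot}>\n"
        # AllowOverride All lets the site's own .htaccess file take effect.
        "        AllowOverride All\n"
        "    </Directory>\n"
        "</VirtualHost>\n"
    )


def hosts_entry(site: str) -> str:
    """Return the /etc/hosts line that points <site>.local at this machine."""
    return f"127.0.0.1\t{site}.local"


if __name__ == "__main__":
    print(vhost_config("john", "site1"))
    print(hosts_entry("site1"))
```

The first output would typically be saved as a file under /etc/apache2/sites-available/ and enabled with a2ensite, and the second appended to /etc/hosts; both steps need root privileges.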

http://tool.motoricerca.info/robots-checker.phtml

I found this tool for validating robots.txt while trying to figure out why my sites were getting crawled like crazy! I run three sites on one shared host account, and it turns out that the robots.txt file has to be in the root directory of the host. For example, let’s say you have a default site called domain.bla which points to your default root directory, / (public_html/). If you also have other sites inside that root directory, like public_html/domain2.bla/, the robots.txt file in the domain2.bla site is ignored because it is not in the root directory. So you have to customize the robots.txt in the root directory to cover all the sites under it.

This has greatly diminished the crawling of my sites.
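As a quick way to check a combined root robots.txt before uploading it, here is a small sketch using Python's standard `urllib.robotparser`. The rules and the `/admin/` paths are invented for illustration; only the domain.bla and domain2.bla names come from the example above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical root-level robots.txt that also covers the site kept in
# the public_html/domain2.bla/ subdirectory.
ROOT_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /domain2.bla/admin/
"""


def is_allowed(url: str, agent: str = "*") -> bool:
    """Check a URL against the root robots.txt rules defined above."""
    parser = RobotFileParser()
    parser.parse(ROOT_ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in (
        "http://domain.bla/index.html",
        "http://domain.bla/admin/settings",
        "http://domain.bla/domain2.bla/index.html",
        "http://domain.bla/domain2.bla/admin/settings",
    ):
        print(url, "->", "allowed" if is_allowed(url) else "blocked")
```

Rules are matched against the path as seen from the root of the host, which is why the subdirectory site's paths carry the /domain2.bla/ prefix here.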

May God bless!

Joao

UPDATE!!! The author of the robots.txt validator has created a new tool, see here: http://www.toolsiseek.com/robots-txt-generator-validator/